Talk:Boltzmann distribution


Everyone has over-complicated things

Everyone has over-complicated things, at least in the introduction, by going way into applications. In reality, a Boltzmann probability distribution is purely a normalized mathematical function that doesn't have anything to do with the applications. Why not express the Boltzmann probability distribution (and/or Boltzmann probability density) quite simply at first, as a mathematical function? This would be good for the General Reader, a novice, someone who doesn't know much about physics at all. (You people are too busy discussing - or arguing about - things like Classical Mechanics and Quantum Mechanics that the general reader probably doesn't know anything about.) The key notion is "KISS" = "Keep It Simple, Stupid", at least in the beginning, for the General Reader.

To give a specific example, the Gaussian distribution (or Gaussian density) is expressed mathematically as f(x) = Kexp(-ax^2) where the K is a normalizing constant, and "a" is an arbitrary positive constant. This has nothing to do with the physical application; and in fact, it can be used in thousands of different applications.

Likewise, for x greater than or equal to zero, there are many other probability densities that can be considered and applied, where K is a different normalizing constant for each one:

f(x) = Kexp(-ax); f(x) = Kxexp(-ax); f(x) = Kxexp(-ax^2); f(x) = K(x^2)exp(-ax^2); and so forth. These distributions (densities) have various names, such as the Rayleigh distribution; the Boltzmann distribution; the exponential distribution; and so forth. These can be applied in many fields other than physics, statistical mechanics, etc.
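As a rough illustration of the point that K is fixed purely by normalization, the sketch below integrates each of the four shapes listed above numerically and compares the result with the closed-form constant from standard integrals. The value of a, the integration cutoff, and all function names are assumptions made for this example only.

```python
import math

def integrate(f, upper, steps=200_000):
    """Midpoint-rule approximation of the integral of f over [0, upper]."""
    h = upper / steps
    return h * sum(f((i + 0.5) * h) for i in range(steps))

# Unnormalised shapes from the comment above, each paired with the
# closed-form normalising constant K(a) from standard integrals.
SHAPES = {
    "exp(-a x)": (lambda x, a: math.exp(-a * x), lambda a: a),
    "x exp(-a x)": (lambda x, a: x * math.exp(-a * x), lambda a: a ** 2),
    "x exp(-a x^2)": (lambda x, a: x * math.exp(-a * x * x), lambda a: 2 * a),
    "x^2 exp(-a x^2)": (lambda x, a: x * x * math.exp(-a * x * x),
                        lambda a: 4 * a ** 1.5 / math.sqrt(math.pi)),
}

def numeric_constant(shape, a):
    """K such that K * shape(x, a) integrates to 1 over x >= 0."""
    # Cutoff chosen so the neglected tail is far below rounding error.
    upper = 50.0 * max(1.0 / a, 1.0 / math.sqrt(a))
    return 1.0 / integrate(lambda x: shape(x, a), upper)

if __name__ == "__main__":
    a = 2.0  # arbitrary positive constant, chosen only for illustration
    for name, (shape, exact) in SHAPES.items():
        k_num = numeric_constant(shape, a)
        print(f"{name:16s} numeric K = {k_num:.6f}   closed form K = {exact(a):.6f}")
```

Nothing in the calculation refers to temperature or energy; the physical interpretation only enters when x and a are given units.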

Once again, to summarize, the Boltzmann distribution should be explained in simple mathematical terms first - before moving on into all of the complications of the applications in physics - and with the recognition that the Boltzmann distribution can conceivably be used in entirely different realms of probability and statistics. 98.67.174.68 (talk) 17:02, 21 June 2009 (UTC)

- See the Wikipedia article on the Rayleigh distribution (http://en.wikipedia.org/wiki/Rayleigh_distribution) for a much clearer exposition of the mathematical properties of a distribution, with the physics explained elsewhere. 98.67.174.68 (talk) 17:11, 21 June 2009 (UTC)

This is a terrible encyclopedia entry. It is relevant only for those familiar with physics, and expresses itself mathematically. An encyclopedia should explain to beginning laypeople what something means, and then it can include summaries for specialists. Looking at this entry, I have no idea what it means. What does it mean -- not in gobbledygook equations, but in simple English? -- Preceding unsigned comment added by 67.49.42.9 (talk) 12:05, 13 September 2012 (UTC)

Cut from Maxwell-Boltzmann distribution

- Also it may be expressed as a discrete distribution over a number of discrete energy levels, or as a continuous distribution over a continuum of energy levels.

- The Maxwell-Boltzmann distribution can be derived using statistical mechanics (see the derivation of the partition function). As an energy distribution, it corresponds to the most probable energy distribution, in a collisionally-dominated system consisting of a large number of non-interacting particles in which quantum effects are negligible.

Re exponential decay..

(relevant to user jheald) I've read your comments and added some more of my own (see below). You mention that the equation I supplied below might/should become like the Boltzmann distribution when there is infinite energy. One way I can get it to resemble the Boltzmann distribution is to take the probability of any given particle having energy n OR MORE; this is the same as the probability that any given particle has the nth energy level filled with an energy quantum. The equation for this would be: probability that the nth energy level is occupied =

sum from x=n to x=q (q is the maximum energy) of p(x)

Therefore using this (the above equation) does give a drop-off in probability as n increases, but the shape of the curve is not the same as inverse exponential (at least when q, the total energy, is finite). HappyVR 19:40, 12 February 2006 (UTC)
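The tail sum described above can be sketched as follows, assuming p(x) is the binomial expression given in the "Difficult Subject" thread. The values of q and N and the function names are illustrative assumptions. A purely exponential fall-off would give a constant ratio between successive tail probabilities, so the ratios are computed as a quick check.

```python
from math import comb

def p(x, q, N):
    """Probability that a given particle holds exactly x of the q quanta,
    assuming each quantum lands on one of N particles independently."""
    return comb(q, x) * (1.0 / N) ** x * (1.0 - 1.0 / N) ** (q - x)

def prob_at_least(n, q, N):
    """Sum of p(x) from x = n to x = q: probability of n quanta or more."""
    return sum(p(x, q, N) for x in range(n, q + 1))

def tail_ratios(q, N, n_max):
    """Ratios P(>= n+1) / P(>= n) for n = 0 .. n_max - 1."""
    return [prob_at_least(n + 1, q, N) / prob_at_least(n, q, N)
            for n in range(n_max)]

if __name__ == "__main__":
    q, N = 50, 10  # illustrative values, not taken from the discussion
    for n, r in enumerate(tail_ratios(q, N, 10)):
        print(f"n = {n:2d}   P(>= n) = {prob_at_least(n, q, N):.4f}   ratio = {r:.4f}")
```

For these values the ratio shrinks as n grows rather than staying constant, which is consistent with the remark that the curve falls off but is not a simple inverse exponential.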

Difficult Subject

Difficult Subject but...

A correct equation is 'hard' to derive but making an assumption of quantized energy levels equally spaced it is easily shown that for total energy quanta q distributed randomly over N particles/atoms the probability that any given particle/atom has n energy quanta is:

((1/N)^n x ((N-1)/N)^(q-n) x q!) / (n! x (q-n)!) (taking 0! to equal 1 instead of using the longer form (x+1)!/(x+1))

This gives the correct distribution - which shows a maximum in the energy distribution (a hump) as opposed to the exponential decay given by the incorrectly derived partition function. HappyVR 23:12, 10 February 2006 (UTC)
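The expression above has the form of a binomial probability with q trials and success probability 1/N. A minimal sketch for evaluating it and locating the hump is given below; the values q = 50 and N = 10 are assumptions chosen only for illustration, as are the function names.

```python
from math import comb

def quanta_probability(n, q, N):
    """q! / (n! (q-n)!) * (1/N)^n * ((N-1)/N)^(q-n):
    probability that one given particle holds n of the q quanta when each
    quantum is assigned to one of the N particles independently."""
    prob_one = 1.0 / N
    return comb(q, n) * prob_one ** n * (1.0 - prob_one) ** (q - n)

def distribution(q, N):
    """Probabilities for n = 0, 1, ..., q."""
    return [quanta_probability(n, q, N) for n in range(q + 1)]

if __name__ == "__main__":
    q, N = 50, 10  # illustrative values, not taken from the discussion
    probs = distribution(q, N)
    peak = max(range(q + 1), key=probs.__getitem__)
    print("total probability:", sum(probs))
    print("most probable n:", peak, "  average quanta per particle q/N:", q / N)
```

With these values the maximum sits at the average number of quanta per particle, q/N, so under this model the hump moves up in energy as q increases at fixed N.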

- That's all very interesting. But the Boltzmann distribution is the probability distribution for the system at a fixed, sharply defined temperature; for example, the distribution occurring if the system could exchange energy with an infinite heat reservoir; i.e. a canonical distribution.

- In your example there is no infinite heat reservoir, the temperature is not sharply defined, it's not a canonical distribution, so ... surprise, surprise, it's not the Boltzmann distribution.

- I'm somewhat at a loss as to what it is you think your example is supposed to show that's relevant to this article. -- Jheald 22:07, 11 February 2006 (UTC).

- Thanks for your interest. However the above equation also only applies at a fixed temperature i.e. at a fixed total energy (to quote 'a closed thermally isolated system')- so I will ignore your second point. In the above equation q gives the total energy.

- —No; this is exactly the point. A system with fixed total energy (ie in a microcanonical situation) does not have a single fixed well-defined temperature. Statistically it has a spread-out / fuzzy / not well-defined temperature. It is only in the limit of such a system having an infinite energy content that each sub-system can be considered to be in equilibrium with an infinite heat bath, and the system and all its parts can be considered to have a single well-defined temperature.

- If the energy content is fixed, but not (reasonably near to being) infinite, then you can't consider the system to have a well-defined single temperature, so you can't expect a Boltzmann distribution. -- Jheald 03:17, 12 February 2006 (UTC).

- Thanks again. I agree that as a statistical treatment it may seem that the situation is 'fuzzy' or 'spread out' - but if you consider all the possibilities of this system (i.e. N particles, q energy), i.e. each possible distribution of energy - and sum them giving an average distribution of energy - this gives a well defined partition function - as above. I honestly don't fully understand your point about infinite energy. Using a very simple analogy - a cup of tea - at a given moment the tea has a given temperature. Now the tea could theoretically be totally isolated from its surroundings, i.e. very good insulation - therefore not in contact with an infinite heat reservoir at all - and not having infinite energy in its own system either - but still having a well defined temperature (measured). Is there a fundamental difference between the measured temperature and the theoretical temperature? HappyVR 18:24, 12 February 2006 (UTC)

- The point I was making was that for a random distribution of energy amongst a number of particles-

- The distribution of - the number of particles with a given energy (eg molecules with total energy n) vs the energy (n in the above example)

- is different from

- The distribution of - the relative occupancy of an energy level of energy Ei (in the text of the article) vs the energy (Ei in the text of the article)

- Therefore I would change the first line of this article from

In physics, the Boltzmann distribution predicts the distribution function for the fractional number of particles Ni / N occupying a state i with energy Ei:

- to

In physics, the Boltzmann distribution predicts the distribution function for the fractional number of particles Ni / N with state i of energy Ei occupied to be:

- (followed by rest of text)

- (however I am not sure if the following equations in the text absolutely match this description.)

- As I see it the current text describes a distribution similar to the equation I gave above. Perhaps the text should/could be clearer?

- —Fair point. I've made some changes to some of the first two paragraphs, and I hope this is now clearer. -- Jheald 03:43, 12 February 2006 (UTC).

- Also further on in the article it states:

Alternatively, for a single system at a well-defined temperature, it gives the probability that the system is in the specified state.

- Should this not read (for example):

Alternatively, for a single system (of particles) at a well-defined temperature, it gives the probability that one particle of the system is in the specified state.

- —No. The word 'alternatively' is supposed to quietly put the reader on alert, that we're moving away from Boltzmann's picture of the frequencies of energies of single particles, to Gibbs's picture of probabilities for the energy of an entire thermodynamic system (in energy equilibrium with its surroundings). -- Jheald 03:43, 12 February 2006 (UTC).

- I feel that in its current state the text does not adequately describe the situation described in the given equations. That is, the text and equations are at odds. The equation I supplied was one matching the situation given in the first line of the text, albeit a simplified expression - equally spaced energy levels are clearly assumed. HappyVR 22:53, 11 February 2006 (UTC)

- —Yes. I hope between us the text is now clearer, and it's clearer that your equation does not reflect the underlying physical set-up required for a Boltzmann distribution.

- It's only in the limit that the total energy in your set-up tends to infinity that one would expect to start finding Boltzmann-like distributions. And I think this is what happens - your 'hump' energy tends to zero; and the fall-off of probability above the hump becomes closer and closer to exponential. -- Jheald 03:43, 12 February 2006 (UTC).

- Unfortunately my distribution does not appear to do this - the hump tends to move up in energy as total energy increases - however I will check more carefully... However this page is about the Boltzmann distribution.

- However it is fair to say that beyond the hump (the peak probability) the fall-off is similar to exponential decay.

- I have put a more important point below:

(Note that in my supplied equation the energy is assumed to be discretely quantised - if the size of the quanta decreases, giving more energy quanta, the shape of the distribution stays the same but becomes smoother. At infinitely small quanta size there are infinite quanta but not of course infinite energy - this info is probably not relevant?)

- Thanks for your changes - I'm still reading the text very slowly and carefully but I assume it is clearer now. Thanks again. However your comment above about the system needing to have infinite energy has intrigued me - are you saying that the Boltzmann distribution only applies in this case? If so I must look at other pages - surely this feature should be stated VERY CLEARLY in, say, pages relating to derivation or use of the Boltzmann distribution - in my experience this necessity is not stated at all...

I assumed that the Boltzmann distribution would apply to isolated systems of finite energy. HappyVR 18:24, 12 February 2006 (UTC)

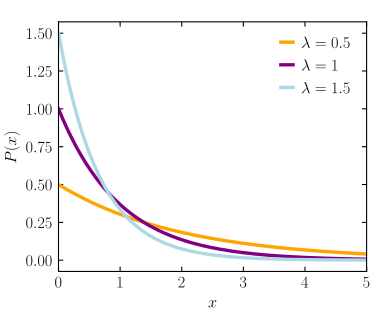

Illustration

If a picture paints a thousand words... Could someone find a graph to illustrate the text? It makes it much easier to visualise. Thanks. --King Hildebrand 19:06, 5 August 2006 (UTC)

Notation

What does E' mean in the probability distribution near the bottom? Is it the derivative of E? With respect to what? Is it the total energy? --aciel 22:52, 2 April 2007 (UTC)

- I think it's just a dummy energy variable to integrate over. Dicklyon 23:53, 2 April 2007 (UTC)

individual particle or whole microstate?

Feynman's "Lectures on Physics" and some non-wikipedia web sources ([1], [2]) state that the Boltzmann distribution applies to the probability distribution for a single particle within the system. With some interpretation, this article states the same thing in its first definition. But it is also stated here:

Alternatively, for a single system at a well-defined temperature, it gives the probability that the system is in the specified state.

[3] similarly states, in the proof box,

A system with a well-defined temperature, i.e., one in thermal equilibrium with a thermal reservoir, has a probability of being in a microstate i given by Boltzmann's distribution.

Please give a citation for this if it is in fact true. In particular, how could the distribution for the whole (classical) system have a nonzero probability for any microstate whose energy is different from the actual, constant energy of the system? Halberdo (talk) 05:27, 22 December 2008 (UTC)

- The Boltzmann distribution conceptually applies for a thermodynamic system in equilibrium with a heat bath, with which the system can exchange energy. It applies if the temperature is well defined. (Nailing down the energy defines a different distribution, called the microcanonical distribution).

- So, why does that produce sensible results, both for a single particle (sometimes), and for a macroscopic "classical-sized" system?

- For a single particle, we are making the following conceptual steps if we apply the Boltzmann distribution:

- (1) We are taking the single particle to be our thermodynamic system of interest, the rest of the particles being the heat-bath with which it can exchange energy;

- (2) We are assuming that, at least to a very good approximation, we can treat our particle as independent of the rest of the system (except for short sharp collisions) - so that it can be ascribed its own energy, there is no significant interaction energy apart from the short times when the collisions are happening; we are treating the particle as if (again, to a high degree of accuracy) it can be ascribed its own quantum state, independent of the state of anything else.

- These are the prerequisites for being able to treat the particle as a thermodynamic system in its own right, which is the starting point for deriving the Boltzmann distribution. It is worth noting that while these conditions may be met for a particle in an ideal gas, they are only approximations for particles in real systems, such as real gases, liquids or solids, where one may not be able to ignore interaction energy shared between different particles, so one may not reliably be able to treat a particle as a separate independent sub-system. In such cases, one eventually has to consider the statistical mechanics of all the particles as a whole -- the simple approximation of being able to treat each particle independently breaks down; such systems may even do things that would appear to break the second law, if the calculations have ignored the correlations.

- So, the reason we can often apply the Boltzmann distribution to single particles is that often we can treat the single particles as individual thermodynamic systems (to a good enough level of approximation).

- For a macroscopic system, the probability of a particular energy is given by p(E) ~ g(E) e^(-E / kT), where g(E) is the multiplicity of states at a particular energy, and the Boltzmann factor gives the relative probability of the system being in any one of those states. For energies above the average energy of a macroscopic system, the Boltzmann factor "wins", and because E >>> k T, the exponential in the Boltzmann factor for the whole system falls off very quickly indeed as a function of energy. In effect, it locks the system to within a few k T of the average energy - less than a drop in the ocean.

- Wouldn't the non-physical result that E <<< k T be even more likely? What in that formula forces E to be at all close to the average energy? Halberdo (talk) 13:44, 22 December 2008 (UTC)

- Well, for an ideal gas of N molecules, the reason the average energy of the system is so big is that the number of states g(E) increases ~ E^(3N - 1), and N may be of the order of 10^23. That is enough for g to comfortably outgrow the exponential at low and medium energies. It is only when you reach high enough energies, much larger than kT, that the exponential can finally "win".

- In fact, if you do the calculation properly, you can show that the average total energy ~ (3/2) NkT (which should be reassuringly familiar), with its standard deviation going as N^(1/2) kT. When N ~ 10^23, that (N^(1/2) / N) factor makes for a very small fractional uncertainty in the energy indeed. Jheald (talk) 23:05, 22 December 2008 (UTC)

- I removed the [citation needed] and added an external link to a derivation for whole microstates. I'm undecided whether this article should include that derivation, or a derivation for the individual particle distribution, or remain as it is. Halberdo (talk) 03:01, 23 December 2008 (UTC)

- The probability for the energy distribution turns into something very like a very sharp spike - only the macroscopic "classical" energy is allowed. For a big enough system, the result of the Boltzmann distribution (which assumes the system can exchange energy with its surroundings) becomes for all practical purposes identical with the result of the microcanonical distribution (which assumes it can't). Even though the macroscopic system can exchange energy with its surroundings, for a big enough system the net average fluctuation that possibility produces becomes negligible compared to its average energy.

- So the Boltzmann distribution does give sensible results for macroscopic systems, too.
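The sharpening of the energy distribution described above can be checked numerically. The following is a minimal sketch, assuming a density of states of the power-law form g(E) ∝ E^(s-1), so that p(E) ∝ g(E) e^(-E/kT) is a gamma density with shape s. The exponent s is a free parameter of this example (s = 3N/2 is the value that reproduces the (3/2) NkT average quoted above), and the function names and integration settings are illustrative assumptions.

```python
import math

def energy_density(E, s, kT=1.0):
    """Normalised p(E) for g(E) ∝ E**(s - 1) times exp(-E / kT).
    Evaluated through logarithms so that large s does not overflow."""
    if E <= 0.0:
        return 0.0
    log_p = (s - 1.0) * math.log(E) - E / kT - math.lgamma(s) - s * math.log(kT)
    return math.exp(log_p)

def moments(s, kT=1.0, steps=100_000):
    """Mean and standard deviation of E by midpoint integration."""
    upper = (s + 20.0 * math.sqrt(s) + 20.0) * kT  # well beyond the peak
    h = upper / steps
    m0 = m1 = m2 = 0.0
    for i in range(steps):
        E = (i + 0.5) * h
        w = energy_density(E, s, kT) * h
        m0 += w
        m1 += E * w
        m2 += E * E * w
    mean = m1 / m0
    return mean, math.sqrt(m2 / m0 - mean * mean)

if __name__ == "__main__":
    for s in (1.5, 150.0, 15000.0):  # s = 3N/2 for N = 1, 100, 10000
        mean, std = moments(s)
        print(f"s = {s:8.1f}   mean/kT = {mean:10.2f}   std/kT = {std:8.2f}   std/mean = {std / mean:.4f}")
```

Under this assumption the mean grows like s while the standard deviation grows only like the square root of s, so the fractional width shrinks as 1/sqrt(s); the exponents here are far smaller than anything macroscopic, but the trend toward a sharp spike is already visible.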

- Hope that makes sense and helps. All best, Jheald (talk) 09:41, 22 December 2008 (UTC)

- References: two books I happen to have on shelf are Tolman, The Principles of Statistical Mechanics (1938; Dover, 1979), pp. 58-59; and Waldram, The Theory of Thermodynamics (Cambridge University Press, 1985), pp. 30-35, which gives quite a nice discussion. But if you look up "canonical distribution" or "canonical ensemble" in any standard introductory textbook on statistical mechanics, you should find quite a similar explanation. Jheald (talk) 10:55, 22 December 2008 (UTC)

thermal equilibrium

The basic assumption of thermal equilibrium, which is a requirement for this distribution to hold, appears to be missing from the article. -- Preceding unsigned comment added by 192.249.47.174 (talk) 19:19, 12 March 2012 (UTC)

- You mean the fact the Boltzmann distribution indicates that atoms in thermal equilibrium are more likely to be in states with lower energies? --Luke R001 (talk) 10:44, 7 April 2012 (UTC)Luke R001
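For a concrete picture of lower-energy states being more likely at equilibrium, here is a minimal sketch that evaluates p_i = exp(-E_i / kT) / Z for a handful of discrete states. The energy values, the choice of units (energies measured in units of kT), and the function name are assumptions made for illustration.

```python
import math

def boltzmann_probabilities(energies, kT):
    """Return p_i = exp(-E_i / kT) / Z for the given state energies.
    Energies are shifted by their minimum first; the shift cancels in the
    ratio and keeps the exponentials from overflowing."""
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / kT) for e in energies]
    Z = sum(weights)  # partition function relative to the shifted energies
    return [w / Z for w in weights]

if __name__ == "__main__":
    energies = [0.0, 1.0, 2.0, 3.0]  # hypothetical levels, in units of kT
    for e, prob in zip(energies, boltzmann_probabilities(energies, kT=1.0)):
        print(f"E = {e:.1f} kT   p = {prob:.4f}")
```

Each step up in energy by kT lowers the probability by a factor of e, and the probabilities only have this form when the system is in equilibrium at a single well-defined temperature.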

Identical to Maxwell–Boltzmann statistics?

It appears to me that this describes concepts exactly identical to Maxwell–Boltzmann statistics. Am I missing something, or should these be merged? --Nanite (talk) 07:51, 7 August 2013 (UTC)

- Well, since nobody answered I'll answer for myself. :) Having dug around some more, it looks like I was certainly wrong that Boltzmann distribution refers to M-B statistics. About half the time people mean to refer to a canonical ensemble. Anyway, this page would be better replaced with a disambiguation-ish page which points people in the right direction, as the technical content is better covered in the specific articles. I'm removing the merge tags and will soon replace this article with this draft in progress. Nanite (talk) 21:49, 21 January 2014 (UTC)

Inverted Boltzmann Distribution

This section seems irrelevant. Negative absolute temperatures have been known and observed since the invention of the maser in the 1950s. Either this section needs expansion and the relevance needs to be clarified, or it should be removed. As it is, I see it as someone seeing the phrase "Boltzmann distribution" in some popular news of the day, and in their ignorance adding it to the Wikipedia entry. This section is 95% speculation and has no place here, imho. Might just as well claim it could lead to perpetual motion. It's PR hype. 72.172.11.222 (talk) 17:29, 30 September 2013 (UTC)

Done. 131.203.251.94 (talk) 19:47, 14 October 2013 (UTC)

Error in graph

The rightmost curve in the Boltzmann distribution graph should read 1GeV, not 1eV. https://en.wikipedia.org/wiki/File:Boltzmann_distribution_graph.svg -- Preceding unsigned comment added by 80.217.138.131 (talk) 17:30, 29 December 2015 (UTC)

Unlabelled graph

I second the notion that this is a bad article. I'm not sure if I understand this plot correctly. Isn't the distribution discrete? Why is x continuous? But I am not sure, because x and lambda are, funnily, not defined in the article or the graph's caption. --194.39.218.23 (talk) 12:55, 22 September 2023 (UTC)
